According to eMarketer’s Worldwide OTT Video Viewers Forecast 2025, more than 3.64 billion people are expected to watch digital video (streamed or downloaded) at least once a month in 2025. That is roughly 45% of the global population. Statista’s Number of Digital Video Viewers Worldwide from 2019 to 2023 report shows that this number had already passed 3.5 billion in 2023, and it expects continued growth through 2025, driven mainly by rising internet penetration and wider use of mobile devices.

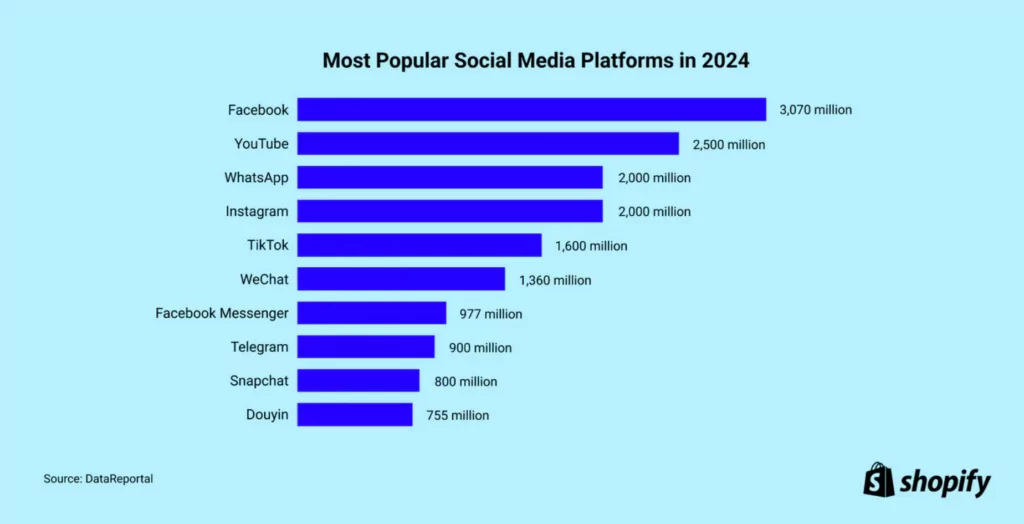

Meanwhile, short-form video has surged in popularity, propelled by platforms such as TikTok, YouTube Shorts, and Instagram Reels. eMarketer’s 2025 forecast indicates that more than 2.5 billion people will watch short-form video each month in 2025, a growth driven by mobile-first consumption and the platforms’ ease of use. TikTok (per ByteDance’s 2024 statement) claims more than 2 billion monthly active users worldwide in 2024, most of whom watch short-form video. YouTube Shorts already had 1.5 billion monthly active viewers in 2022 and reached 70 billion daily views by 2024 (Zebracat’s Video Consumption Trends for 2025), and its user base is expected to exceed 2 billion by 2025. Adding Instagram Reels (Meta’s 2024 report puts monthly active users across all its apps at over 3 billion) and platforms such as Snapchat, the global short-form video audience in 2025 is projected at 2.5 billion to 3 billion.

These reports show a video industry in vigorous growth. We are living in a new era of video: if the past belonged to the web page, the present belongs to video. Behind this world stand millions of content creators contributing their talent and time.

According to Grand View Research’s Digital Content Creation Market Size and Share Report, 2030 (November 2024), the digital content creation market was valued at $32.28 billion in 2024 and is expected to grow at a compound annual growth rate (CAGR) of 13.9% from 2025 to 2030. Video creators are a major contributor to that market. YouTube states that as of 2023 it had more than 50 million content creators (channels) worldwide, about 2 million of whom participate in its Partner Program to earn revenue (Google Annual Report). TikTok reports more than 1 billion active creators worldwide (ByteDance 2024 data), a figure that includes occasional uploaders. Influencer Marketing Hub estimates that by 2025 there will be 100 million to 200 million active content creators globally (those regularly producing videos, including short-form content). It attributes this growth to low barriers to entry and monetization opportunities.
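A growth rate like this compounds: a value V₀ growing at rate r for n years becomes V₀(1 + r)ⁿ. The short Python sketch below applies that formula to the reported figures. It assumes that the 13.9% rate is applied once a year for six steps, starting from the 2024 base. This is our own illustrative assumption, not the report’s stated method.

```python
def project_value(base: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate: V_n = V_0 * (1 + r) ** n."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return base * (1 + cagr) ** years


# Reported 2024 market size (USD billions) and the 2025-2030 CAGR.
BASE_2024 = 32.28
CAGR = 0.139

# Assumption: one compounding step per year from 2024 to 2030 (six steps).
for year in range(2024, 2031):
    value = project_value(BASE_2024, CAGR, year - 2024)
    print(f"{year}: ${value:,.2f}B")
```

Under that assumption the market roughly doubles, reaching about $70 billion by 2030. The report’s own projection may differ depending on its base year and rounding.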

The sheer number of content creators has made competition in the video and short-form video industries intense. Everyone wants to produce videos more efficiently, at higher quality, and at lower cost. As the industry expands, creators keep searching for better video creation tools that give them an edge. Only those who secure and hold that edge survive in this industry over the long term.

We surveyed the video tool market as of 2025. We analyzed relevant internet search indices and randomly interviewed more than 100 video creators about their experience with video generation tools and auxiliary tools. From this we identified nine mainstream video generation tools. All of them are built on recent AI technology and serve as critical tools for current and next-generation video production, and tens of millions of content creators worldwide already use them. We explain each tool according to the production scenarios it suits and the kinds of creators it serves. Each explanation includes a basic tutorial to help readers understand the tool’s features.

By reading this article, you will gain a basic yet comprehensive understanding of the current mainstream video tools, which may help inspire you in content creation.

Synthesia

Synthesia is a synthetic media generation company established in 2017, headquartered in London, UK, dedicated to developing video generation tools using artificial intelligence technology to help users quickly create professional video content. Its core product, Synthesia Studio, is a software-as-a-service (SaaS) platform based on text-to-video technology, allowing users to generate videos with virtual avatars (AI avatars) without traditional video filming equipment or professional editing skills.

Synthesia’s customer base is broad. As of January 2025, it serves more than 60,000 clients worldwide, including over 60% of Fortune 100 companies. The tool is widely used for internal corporate communication, training videos, marketing advertisements, product demonstrations, and chatbots. The company was co-founded by Victor Riparbelli, Steffen Tjerrild, Lourdes Agapito, and Matthias Niessner. It developed core deep-learning algorithms that generate realistic virtual avatars from voice and facial movements.

In 2025, Synthesia raised $180 million in a Series D round. The round brought its total funding to $330 million and doubled its valuation to $2.1 billion, making it the UK’s most valuable generative AI media company. NEA led the round, and new investors included World Innovation Lab (WiL) and Atlassian Ventures. The funds are allocated to product innovation and to expansion in North America, Europe, Japan, and Australia. In January 2025, former Amazon executive Peter Hill joined the company as Chief Technology Officer (CTO) to further advance its technology.

Synthesia emphasizes responsible AI use, prohibiting the creation of unauthorized cloned content (e.g., celebrities or political figures) and implementing strict screening and content moderation mechanisms to prevent “deepfake” misuse. In 2024, the company became the world’s first AI enterprise to receive ISO/IEC 42001 AI Management Standard certification, highlighting its commitment to safety and compliance.

Product Features and Key Functions

Synthesia’s primary features are its efficiency, ease of use, and versatility, making it particularly suitable for businesses and individuals needing to generate video content quickly. Below are its core features and key functions:

Features:

- Realistic AI Avatars: Provides over 230 pre-set virtual avatars covering different genders, ages, and ethnicities; users can also create custom avatars (e.g., their own digital clones), supporting micro-expressions (e.g., nodding, frowning) to enhance realism.

- Multilingual Support: Supports over 140 languages and accents, equipped with a one-click translation function to automatically translate videos into other languages, suitable for global audiences.

- No Professional Equipment Needed: Requires no cameras, microphones, or studios; video production can be completed solely through a browser, reducing costs and time.

- Highly Customizable: Allows users to adjust avatar clothing colors, backgrounds, and brand elements (e.g., logos and fonts) to ensure video consistency with branding.

- Security and Compliance: Complies with SOC 2 Type II and GDPR standards, with transparent data handling, emphasizing AI safety and privacy protection.

Key Functions:

- Text-to-Video Generation: After inputting a script, AI automatically generates a video with an avatar narration, driven by text-to-speech technology.

- AI Video Assistant: Supports uploading documents (PDF, PPT, Word, etc.), URLs, or direct prompt input to quickly generate video outlines and scripts.

- Screen Recording: Built-in AI screen recording tool for capturing tutorials or demo content, with automatic transcription of voice into scripts.

- Media Library and Customization: Offers millions of royalty-free images, videos, icons, and music, with the option for users to upload their own materials.

- Real-Time Collaboration: Teams can edit videos in real-time within a shared workspace, speeding up the creation process.

- Interactive Player: Videos support automatic adaptation to the viewer’s language, with plans to introduce hotspots, forms, and personalized call-to-action features.

- Expressive Avatars: Launched in 2024, fourth-generation avatars can automatically adjust expressions and tones based on script emotions, such as frowning for sadness or smiling for excitement.

Basic Tutorial: How to Operate Synthesia

Below are the basic steps for using Synthesia to create a video, suitable for beginners to get started quickly:

Step 1: Register and Log In

- Visit the official website synthesia.io.

- Click “Get Started” or “Sign Up” to register an account. A free trial is available, along with paid plans such as Starter and Enterprise.

- Log in to enter the Synthesia Studio main interface.

Step 2: Create a New Video

- On the homepage, click “New Video” in the top-right corner.

- Choose a generation method:

  - From Scratch: Blank project, input your own script.

  - AI-Generated: Click “Generate video with AI,” enter a prompt, upload a document, or provide a URL for AI to generate a script and outline.

  - Template: Select from over 300 templates (e.g., training, marketing) for a quick start.

- If using the AI assistant, input details like goal, audience, and language, click “Create outline” to generate an outline, review it, then click “Create video.”

Step 3: Edit the Video

- Input Script: Paste text into the script box scene-by-scene, with each new paragraph corresponding to a new scene.

- Select Avatar: Choose from over 230 pre-set avatars or create a custom avatar (requires a higher-tier plan).

- Adjust Voice: Select a language and voice (over 140 options), with adjustable speed or tone.

- Add Visual Elements: Modify backgrounds (colors, images, or videos), layouts, and transition effects (16 options) in the right panel, or import materials from Shutterstock/Unsplash.

- Brand Customization: Upload logos, fonts, and colors to ensure brand consistency.

- Preview: Click “Preview” to check the effect and adjust details.

Step 4: Generate and Share

- Once confirmed, click “Generate” to produce the video (typically takes a few minutes, depending on length).

- After generation, download (MP4 format), share a link, or embed it on a website.

- For adjustments, click “Edit” to modify the script or elements and regenerate.

Tips:

- Script Optimization: Keep it concise and clear, avoiding complex terms to ensure natural narration.

- Frequent Previews: Preview after editing each segment to ensure smooth transitions and animations.

- Minute Limits: Note the video minute limits of your subscription plan (e.g., Starter plan offers 120 minutes annually) and confirm before generating.
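Each paragraph in the script box becomes a scene, and every plan caps generated minutes. A rough runtime estimate before clicking “Generate” can therefore save credits. The Python sketch below treats blank-line-separated blocks as paragraphs and assumes a narration speed of 150 words per minute. Several parts of the sketch are illustrative assumptions rather than Synthesia settings: that speaking rate, the function names, and the even split of the Starter plan’s 120 annual minutes into 10 minutes per month.

```python
import re

# Assumption: an average narration speed of ~150 words per minute.
# Real text-to-speech voices vary, so treat results as estimates.
WORDS_PER_MINUTE = 150


def split_scenes(script: str) -> list[str]:
    """Split a script into scenes, one per blank-line-separated paragraph."""
    blocks = re.split(r"\n\s*\n", script.strip())
    return [b.strip() for b in blocks if b.strip()]


def estimate_minutes(text: str, wpm: int = WORDS_PER_MINUTE) -> float:
    """Estimate narration length in minutes from the word count."""
    return len(text.split()) / wpm


def plan_video(script: str, budget_minutes: float) -> dict:
    """Summarize scene count and estimated runtime against a minute budget."""
    scenes = split_scenes(script)
    per_scene = [estimate_minutes(s) for s in scenes]
    total = sum(per_scene)
    return {
        "scenes": len(scenes),
        "minutes_per_scene": [round(m, 2) for m in per_scene],
        "total_minutes": round(total, 2),
        "fits_budget": total <= budget_minutes,
    }


# Assumption: the Starter plan's 120 annual minutes spread evenly (10 per month).
budget = 120 / 12

script = """Welcome to our onboarding course.

In this module we cover the tools you will use every day.

Let's get started."""

print(plan_video(script, budget))
```

Actual durations depend on the chosen voice and speed. Treat the result as a planning estimate and confirm the timing in the preview.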

Who Synthesia Is Suitable For

Enterprise Users

- Use Case: Internal communication, employee training, product demonstrations, customer support videos.

- Reason: Synthesia offers over 230 realistic AI avatars and supports over 140 languages, enabling enterprises to quickly generate professional videos without filming equipment or hiring actors. Its brand customization features (logos, colors, fonts) also ensure videos align with corporate identity.

- Typical Users: Human resources teams, marketing departments, customer service teams.

Educators and Trainers

- Use Case: Online courses, instructional videos, employee onboarding training.

- Reason: The tool supports multiple languages and automatic translation, making it easy to produce educational content for global audiences. Screen recording and PPT-to-video functions also facilitate converting existing materials into dynamic narrated videos.

- Typical Users: University professors, corporate trainers, online education platform content creators.

Marketers

- Use Case: Advertising campaigns, social media promotions, product introduction videos.

- Reason: Synthesia’s efficiency and template support allow marketers to create eye-catching videos in a short time. AI avatars can simulate real-person narration, enhancing viewer trust.

- Typical Users: Digital marketing specialists, brand managers, e-commerce sellers.

Small and Medium Business Owners

- Use Case: Low-cost video content production, website-embedded videos.

- Reason: For small businesses with limited budgets, Synthesia produces high-quality videos without requiring professional equipment or teams, offering high cost-effectiveness.

- Typical Users: Startup founders, individual e-commerce operators.

Content Creators (Specific Needs)

- Use Case: Tutorial videos, podcast visualization, standardized narration content.

- Reason: Although Synthesia offers less creative flexibility than tools like Runway, its simple operation and multilingual support suit creators who need quick “talking head” videos.

- Typical Users: Educational YouTubers, podcasters (needing video versions).

Not Suitable For:

- Art-Focused Creators: Synthesia’s output leans toward standardization and lacks the artistic generation capabilities of tools like Runway or Kaiber.

- Creators Needing Complex Dynamic Scenes: The tool focuses on AI avatar narration and does not excel at narrative-driven or complex animated videos.

- Budget-Constrained Individual Users: Despite offering a free trial, its paid plans (starting at $22/month) may be slightly expensive for some independent creators.

Fliki

Fliki is an AI-driven video generation tool that helps users quickly convert text into video content with realistic voices and rich visual effects. It was developed by Nine Thirty Five, a company founded in 2021 and headquartered in Delaware, USA. Fliki grew out of the need for more efficient content creation amid the rapid growth of social media and digital marketing. In those fields, traditional video production is often time-consuming and costly, and Fliki uses AI to lower that barrier significantly.

As of 2025, Fliki has grown into a globally recognized AI video generation platform, collaborating with 73% of Fortune 500 companies and serving content creators, businesses, and educators. The company focuses on providing simple, user-friendly tools while ensuring data security, complying with privacy regulations such as GDPR and CCPA. Fliki operates on a subscription model, offering a free trial and multiple paid plans, aiming to enable users to create high-quality videos without professional skills.

Product Features and Key Functions

Fliki stands out for its user-friendliness and versatility. Below are its core features and key functions:

Features:

- Realistic AI Voices: Offers over 2,000 ultra-realistic text-to-speech (TTS) options, supporting more than 80 languages and 100+ dialects, with natural and fluent voices.

- Rich Media Library: Includes millions of built-in royalty-free images, video clips, and background music tracks; users can also upload custom materials.

- Multi-Purpose Generation: Supports video generation from text, blogs, URLs, and even tweets, adapting to various content needs.

- Fast and Efficient: Requires no complex editing skills, enabling video creation within minutes.

- Brand Customization: Supports adding brand logos, fonts, and colors to ensure content consistency.

Key Functions:

- Text-to-Video Conversion: Input a script or prompt, and Fliki automatically generates a video with voice and visuals.

- AI Voice Cloning: Users can upload audio samples to generate personalized voices (requires a premium plan).

- AI Avatars: Provides virtual character options for narration or presentation content.

- Content Repurposing Tool: Quickly converts blog posts, PPTs, or product pages into videos.

- Social Media Optimization: Supports formats for platforms like YouTube, TikTok, and Instagram.

- Subtitles and Translation: Automatically generates subtitles and supports one-click translation into multiple languages.

- Export Options: Videos can be exported as MP4 files for easy sharing or embedding.

Basic Tutorial: How to Operate Fliki

Below are the basic steps for using Fliki to create a video, suitable for beginners to get started quickly:

Step 1: Register and Log In

- Visit the official website fliki.ai.

- Click “Sign Up” or “Get Started” to register an account using Google, Apple, or email.

- Log in to enter the main interface; the free plan offers 5 minutes of generation credit per month.

Step 2: Create a New Project

- Click the “New File” button on the left panel and select “Video” type.

- Input content source:

  - Manual Input: Directly paste a script or describe video ideas.

  - Import Content: Paste a blog URL, product page link, or upload a PPT.

  - Template: Choose from preset templates (e.g., tutorials, ads).

- Click “Next” to enter the editing interface.

Step 3: Edit the Video

- Script Adjustment: Edit text in the script box, with each paragraph corresponding to a scene.

- Select Voice: Choose from over 2,000 voices (filterable by language and gender), adjusting speed or tone.

- Add Visuals: Select “AI Media” to generate images, or pick videos/images from the stock library, or upload your own materials.

- Customize Elements: Add background music (from the library or uploaded), subtitles, and brand logos.

- Preview: Click “Preview” to check the effect, adjusting scene order or duration.

Step 4: Generate and Export

- Once confirmed, click “Export” to generate the video (free version includes a watermark; paid version does not).

- Download the MP4 file or share directly to social media.

- For adjustments, return to the editing interface, modify, and regenerate.

Tips:

- Keep Scripts Concise: Avoid lengthy sentences to ensure natural narration.

- Use Short Segments: 10-15 seconds per scene for easier adjustments later.

- Check Synchronization: Preview to ensure voice aligns with visuals.
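The 10-15 second guideline can be applied mechanically to a long script: add whole sentences to a scene until the next one would push it past the target length. This Python sketch assumes a narration speed of 2.5 words per second (about 150 words per minute) and uses a simple punctuation-based sentence splitter. Both are assumptions, and Fliki’s own scene handling may differ.

```python
import re

# Assumption: narration runs at roughly 2.5 words per second (~150 wpm).
WORDS_PER_SECOND = 2.5


def sentence_split(text: str) -> list[str]:
    """Naively split text after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def chunk_into_scenes(text: str, max_seconds: float = 15.0) -> list[str]:
    """Greedily pack whole sentences into scenes of at most max_seconds.

    A single sentence longer than max_seconds becomes its own scene.
    """
    scenes: list[str] = []
    current: list[str] = []
    current_secs = 0.0
    for sentence in sentence_split(text):
        secs = len(sentence.split()) / WORDS_PER_SECOND
        # Close the current scene if adding this sentence would overflow it.
        if current and current_secs + secs > max_seconds:
            scenes.append(" ".join(current))
            current, current_secs = [], 0.0
        current.append(sentence)
        current_secs += secs
    if current:
        scenes.append(" ".join(current))
    return scenes


text = (
    "Our app helps you plan meals in minutes. "
    "Pick your diet, set a budget, and get a weekly menu. "
    "Every recipe comes with a shopping list. "
    "Try it free today."
)

# Use the lower end of the 10-15 second guideline.
for i, scene in enumerate(chunk_into_scenes(text, max_seconds=10), start=1):
    print(f"Scene {i}: {scene}")
```

Packing whole sentences keeps the narration from being cut mid-thought. A sentence that alone exceeds the limit is kept intact as its own scene rather than split.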

Who Fliki Is Suitable For

Fliki’s functional design makes it suitable for various user groups. Below are its primary target audiences:

Content Creators

- Use Case: YouTube videos, TikTok short videos, podcast visualizations.

- Reason: Supports quickly converting text or ideas into videos, with a rich media library and voice options enhancing content appeal.

- Typical Users: Independent vloggers, social media influencers.

Marketers and Business Owners

- Use Case: Product demonstrations, promotional videos, internal communications.

- Reason: Enables branded video creation without a professional team, with multilingual support suitable for global promotion.

- Typical Users: E-commerce sellers, digital marketing specialists, small and medium business owners.

Educators

- Use Case: Online courses, training materials, student projects.

- Reason: Can quickly convert educational content into videos, with subtitles and translation facilitating multilingual teaching.

- Typical Users: Teachers, course developers.

Budget-Constrained Beginners

- Use Case: Personal projects, simple advertisements.

- Reason: Free plan offers basic functions, with simple operation requiring no technical background.

- Typical Users: Students, freelancers.

Teams Needing Globalization

- Use Case: Cross-border promotion, employee training.

- Reason: Supports over 80 languages and automatic translation, ideal for content targeting international audiences.

- Typical Users: Multinational corporations, international nonprofits.

Not Suitable For:

- Professional Video Editors: Fliki’s customization options are limited, potentially insufficient for users needing complex effects or precise editing.

- Extreme Creativity Seekers: Compared to tools like Runway, Fliki’s generation leans toward standardization, with less artistic flair.

HeyGen

HeyGen is an AI-driven video generation tool that lets users quickly create professional videos from simple text input, without traditional filming equipment or complex editing skills. It is developed by the company of the same name, founded in 2020 and headquartered in Los Angeles, California, USA. HeyGen’s founders include Joshua Xu and Wayne Liang, both Carnegie Mellon University graduates. Xu spent six years as a software engineer at Snapchat working on AI camera technology. Liang served as a product design lead at Smule and at ByteDance (TikTok’s parent company). Together they set out to apply AI to video content creation, with the vision that “AI will become the new camera.”

As of March 2025, HeyGen has become one of the leading platforms in the AI video generation field, rated by G2 as the best AI video generation tool of 2025 (4.8/5). The company’s team is distributed across Los Angeles, Toronto, San Francisco, and Palo Alto, with approximately 42 employees. HeyGen has secured multiple rounds of funding and serves global enterprise clients, including Amazon and Pfizer, with wide applications in marketing, education, and social media. The company emphasizes efficiency, cost-effectiveness, and multilingual support, striving to make video creation more accessible.

Product Features and Key Functions

HeyGen stands out for its powerful AI technology and user-friendly design. Below are its core features and key functions:

Features:

- Realistic AI Avatars: Offers over 100 virtual avatars covering different ethnicities, ages, and genders; users can also create custom avatars (e.g., personal digital clones).

- Multilingual Support: Supports 175 languages and dialects, equipped with AI translation and lip-sync technology, suitable for global content dissemination.

- Efficient Generation: Generates high-quality videos in minutes without cameras or actors, reducing time and costs.

- Highly Customizable: Allows adjustments to avatar clothing, backgrounds, voice styles, and integration of brand elements.

- Ease of Use: Intuitive interface, accessible without prior video editing experience.

Key Functions:

- Text-to-Video Generation: Input a script, and AI automatically generates a video with avatars and voice.

- AI Voice Cloning: Upload audio samples to generate personalized voices, enhancing realism.

- Video Translation: Translates video content into 175 languages, maintaining voice and lip-sync accuracy.

- Template Library: Provides over 300 preset templates for marketing, education, and social media scenarios.

- Instant Avatar: Generates a user’s AI avatar from a few minutes of video footage of that user.

- Multi-Scene Editing: Supports multi-character, multi-scene video production for richer content.

- Social Media Optimization: Supports various video formats compatible with platforms like YouTube and TikTok.

Basic Tutorial: How to Operate HeyGen

Below are the basic steps for using HeyGen to create a video, suitable for beginners to get started quickly:

Step 1: Register and Log In

- Visit the official website heygen.com.

- Click “Get Started” or “Sign Up” to register using an email or Google account.

- Log in to enter the main interface; the free plan offers 1 minute of video generation credit per month.

Step 2: Create a New Video

- On the homepage, click “Create Video” or “New Project.”

- Choose a generation method:

  - From Scratch: Blank project, input your own script.

  - Template: Select from over 300 templates (e.g., product demos, educational videos).

  - Upload Content: Import PPT, PDF, or URL for AI to generate a script.

- Click “Next” to enter the editing interface.

Step 3: Edit the Video

- Input Script: Enter or paste a script in the text box, with each paragraph corresponding to a scene.

- Select Avatar: Choose from over 100 avatars or create a custom one (requires uploading a 2-5 minute video; premium plans support 4K).

- Adjust Voice: Select a language and voice (over 300 options), adjusting speed or pitch.

- Add Visual Elements: Choose backgrounds, images, videos, or music from the left panel, or upload custom materials.

- Brand Customization: Add logos, colors, or fonts.

- Preview: Click “Preview” to check the effect and adjust details.

Step 4: Generate and Share

- Once confirmed, click “Generate” (typically takes a few minutes).

- Download the MP4 file (free version includes a watermark; paid version does not) or share directly to social media.

- For adjustments, return to the editing interface, modify, and regenerate.

Tips:

- Concise Scripts: Short sentences with simple grammar work best.

- Preview Checks: Ensure voice aligns with visuals.

- Save Progress: Save periodically during editing to avoid interruptions.
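To apply the “concise scripts” tip systematically, check the script for long sentences before pasting it into HeyGen. The sketch below flags sentences above an assumed 20-word threshold, using a naive punctuation-based split. The threshold is an illustrative choice, not a HeyGen rule.

```python
import re

# Assumption: sentences over ~20 words tend to sound rushed when narrated.
MAX_WORDS = 20


def long_sentences(script: str, max_words: int = MAX_WORDS) -> list[tuple[int, str]]:
    """Return (word_count, sentence) pairs for sentences longer than max_words."""
    sentences = re.split(r"(?<=[.!?])\s+", script.strip())
    flagged = []
    for s in sentences:
        n = len(s.split())
        if n > max_words:
            flagged.append((n, s))
    return flagged


script = (
    "Meet the new dashboard. "
    "It brings together your sales figures, support tickets, marketing "
    "campaign results, and team performance metrics in a single view that "
    "updates automatically every hour. "
    "Let's take a look."
)

for count, sentence in long_sentences(script):
    print(f"{count} words: {sentence}")
```

Each flagged sentence is a candidate for splitting into two shorter ones before generating the video.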

Who HeyGen Is Suitable For

HeyGen’s features and design make it suitable for the following groups:

Content Creators

- Use Case: Social media videos (TikTok, YouTube), vlogs, tutorials.

- Reason: Rich templates and fast generation suit creators needing frequent content updates, with multilingual support attracting global viewers.

- Typical Users: YouTubers, social media influencers.

Enterprise Users

- Use Case: Employee training, product promotion, sales demos.

- Reason: Efficiently generates professional videos, with brand customization and multilingual translation suitable for multinational companies.

- Typical Users: Marketing teams, HR departments, startups.

Educators

- Use Case: Online courses, instructional videos, knowledge sharing.

- Reason: Quickly converts PPTs or scripts into videos, with AI avatars enhancing interactivity for remote education.

- Typical Users: Teachers, trainers, educational platform developers.

Marketers

- Use Case: Ad videos, social media promotion, personalized client communication.

- Reason: Supports personalized video generation to boost brand exposure, with translation aiding global marketing.

- Typical Users: Digital marketing specialists, e-commerce sellers.

Budget-Constrained Beginners

- Use Case: Personal projects, simple promo videos.

- Reason: Free plan offers basic functions, with simple operation suitable for users without technical backgrounds.

- Typical Users: Students, freelancers, small business owners.

Not Suitable For:

- Professional Filmmakers: Lacks advanced post-production features (e.g., effects, precise editing) needed for high-precision control.

- Extreme Creativity Seekers: Compared to Runway, HeyGen’s output leans toward standardization, with less artistic depth.

Sora (OpenAI)

Sora is a text-to-video generation model developed by OpenAI that generates high-quality video content from simple text prompts. OpenAI was founded in 2015 and is headquartered in San Francisco, California, USA. Its co-founders include Elon Musk and Sam Altman. It began as a nonprofit focused on AI research and application and later created a capped-profit subsidiary governed by the nonprofit. OpenAI is known for generative AI models such as ChatGPT and DALL·E and aims to advance artificial general intelligence (AGI).

Sora was first previewed to the public on February 15, 2024, demonstrating its ability to generate up to 60-second videos from text, garnering widespread attention. After nearly 10 months of optimization, OpenAI officially released Sora Turbo on December 9, 2024—a faster, more efficient version—available as a standalone product to ChatGPT Plus and Pro users. The name “Sora” comes from the Japanese word for “sky,” symbolizing its “limitless creative potential.” As of March 2025, the tool has been gradually rolled out globally (excluding parts of Europe), becoming a benchmark in AI video generation. OpenAI collaborated with safety testers (red team) and creative professionals during development to ensure the model’s safety and practicality while addressing ethical challenges like misinformation and copyright issues.

Product Features and Key Functions

Sora stands out among AI video tools for its powerful generation capabilities and flexibility. Below are its core features and key functions:

Features:

- High-Quality Video Generation: Supports 1080p resolution videos up to 20 seconds long (Pro users), with detailed visuals and smooth motion.

- Multimodal Input: Supports not only text prompts but also generation or extension based on images or existing videos.

- Physical World Understanding: The model simulates real-world dynamics such as object motion and changes in light and shadow, keeping scenes consistent, although OpenAI acknowledges that it can still produce unrealistic physics.

- Diverse Styles: Offers preset styles (e.g., cinematic, cartoon, paper craft) to meet varied creative needs.

- Safety Design: Generated videos carry C2PA metadata identifying them as AI-generated, and prompts and uploads are subject to content moderation to prevent misuse.

Key Functions:

- Text-to-Video Generation: Input descriptive text to generate a video matching the prompt.

- Image Animation: Converts static images (e.g., DALL·E creations) into dynamic videos.

- Video Extension and Editing:

  - Remix: Adjusts styles or elements of existing videos.

  - Re-cut: Edits or extends video segments.

  - Blend: Merges multiple video elements.

  - Loop: Creates seamless looping videos.

  - Storyboard: Combines multiple clips via a timeline.

- Multilingual Support: Accepts prompts written in different languages, broadening its global applicability.

- Creative Exploration: Built-in “Featured Feed” showcases excellent works for user reference and inspiration.

Basic Tutorial: How to Operate Sora

Below are the basic steps for using Sora to create a video, suitable for beginners to get started quickly:

Step 1: Register and Log In

- Ensure you have a ChatGPT Plus ($20/month) or Pro ($200/month) subscription.

- Visit sora.com and log in with your OpenAI account.

- On first entry, input your birthdate to confirm age and agree to the media upload terms.

Step 2: Create a New Video

- Click “Start Now” or “Create Video” on the homepage.

- Choose an input method:

  - Text Prompt: Describe a scene in the input box (e.g., “A woman in a red dress walking confidently on a city street at night”).

  - Upload Material: Click “+” to upload an image or video as a starting point.

- Set parameters: Select resolution (480p/720p/1080p), duration (up to 20 seconds), and aspect ratio (e.g., 16:9 or 9:16).
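When planning several clips, you can check the parameters above in advance. The Python sketch below encodes only the values named in this article: 480p, 720p, or 1080p; at most 20 seconds; 16:9 or 9:16. The class and constants are hypothetical planning helpers, because Sora settings are chosen in its web interface. Lower subscription tiers may impose stricter limits, and those limits are not modeled here.

```python
from dataclasses import dataclass

# Hypothetical planning constants taken from the options listed in this article;
# they are not an official Sora API, and plan-specific caps are not modeled.
RESOLUTIONS = {"480p", "720p", "1080p"}
ASPECT_RATIOS = {"16:9", "9:16"}  # the article lists these as examples
MAX_SECONDS = 20  # upper bound described above; lower tiers may allow less


@dataclass
class ClipSettings:
    resolution: str
    seconds: int
    aspect_ratio: str

    def problems(self) -> list[str]:
        """Return human-readable issues; an empty list means the settings look valid."""
        issues = []
        if self.resolution not in RESOLUTIONS:
            issues.append(f"unsupported resolution {self.resolution!r}")
        if not 1 <= self.seconds <= MAX_SECONDS:
            issues.append(f"duration {self.seconds}s is outside 1-{MAX_SECONDS}s")
        if self.aspect_ratio not in ASPECT_RATIOS:
            issues.append(f"aspect ratio {self.aspect_ratio!r} not in {sorted(ASPECT_RATIOS)}")
        return issues


plan = [
    ClipSettings("1080p", 20, "16:9"),
    ClipSettings("720p", 30, "9:16"),
]
for clip in plan:
    print(clip, clip.problems() or "OK")
```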

Step 3: Edit the Video

- Refine Prompt: Add details (e.g., colors, lighting, mood) to improve generation accuracy.

- Select Style: Choose from presets (e.g., “cinematic” or “cartoon”) or keep the default.

- Preview Adjustment: Click “Preview” to view the draft, tweaking the prompt or materials.

- Storyboard (Optional): Enter timeline mode and drag cards to arrange multi-scene sequences.

Step 4: Generate and Share

- Click “Generate” and wait for generation (about 1 minute, depending on complexity and traffic).

- View results in the “Library,” where you can download them as MP4 (Plus downloads carry a watermark; Pro downloads do not) or share them.

- Edit iteratively using Remix, Blend, etc., to optimize the video.

Tips:

- Prompt Tips: Specific descriptions (e.g., “A motorcycle speeding through a desert under a blue sky”) yield better results than vague ones.

- Check Status: Peak times may slow generation; check OpenAI’s status page.

- Experiment with Styles: Try presets to find the right creative expression.
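The prompt tips above amount to filling a few slots (subject, action, setting, visual details) and optionally naming a style. The Python sketch below assembles those slots into one prompt string so that variants are easy to compare. The slot structure is an illustrative convention, not a format Sora requires, and you paste the result into the prompt box by hand.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PromptSpec:
    """Hypothetical slots for a specific text-to-video prompt (not an official Sora format)."""

    subject: str                 # who or what is on screen
    action: str                  # what it is doing
    setting: str                 # where and when it happens
    details: List[str] = field(default_factory=list)  # colors, lighting, mood, camera
    style: Optional[str] = None  # e.g. "cinematic"; None leaves the default look

    def render(self) -> str:
        """Join the slots into a single comma-separated prompt sentence."""
        parts = [f"{self.subject} {self.action} {self.setting}"]
        parts.extend(self.details)
        if self.style:
            parts.append(f"{self.style} style")
        return ", ".join(parts) + "."


vague = "A motorcycle in a desert."
specific = PromptSpec(
    subject="A motorcycle",
    action="speeding",
    setting="through a desert under a blue sky",
    details=["late-afternoon sunlight", "dust trailing behind the rear wheel"],
    style="cinematic",
)

print(vague)
print(specific.render())
```

Keeping the slots separate makes it easy to change one detail, such as lighting or style, while holding the rest of the prompt fixed between generations.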

Who Sora Is Suitable For

Sora’s functional design suits various user groups. Below are its primary target audiences:

Content Creators

- Use Case: YouTube shorts, TikTok creative videos, animated content.

- Reason: Quickly generates visual content with diverse styles, ideal for frequent updates.

- Typical Users: Vloggers, social media influencers, animators.

Marketers

- Use Case: Ad videos, product promotions, personalized client videos.

- Reason: Efficiently produces eye-catching content, with multilingual support aiding global outreach.

- Typical Users: Digital marketing specialists, brand managers.

Educators

- Use Case: Instructional videos, historical reenactments, scientific simulations.

- Reason: Converts text or images into dynamic content, enhancing teaching interactivity.

- Typical Users: Teachers, course developers.

Creative Professionals

- Use Case: Proof-of-concept, short film previews, artistic experiments.

- Reason: Supports image animation and style tweaking, ideal for rapid creative validation.

- Typical Users: Filmmakers, designers, artists.

Enterprise Users

- Use Case: Internal training, customer support videos, corporate promotions.

- Reason: Generates professional videos without equipment, saving costs.

- Typical Users: Small business owners, HR teams.

Not Suitable For:

- Professional Post-Editors: Lacks fine-grained editing features compared to traditional software.

- Extremely Budget-Constrained Users: Requires a paid ChatGPT Plus or Pro subscription, so it is not an option for non-paying users.

- Surreal Creativity Seekers: Compared to Runway, Sora leans toward realism.

DeepBrain AI

DeepBrain AI is an AI-powered video generation tool designed to quickly create video content with realistic AI avatars from text input. It was developed by DeepBrain AI, a company founded in 2016, headquartered in Seoul, South Korea. Initially focused on virtual humans (AI Human) and conversational AI technology, DeepBrain AI aims to apply AI to video generation and customer interaction. The company’s founder, CEO Jang Se-young, led the team to develop core deep-learning algorithms, creating highly realistic digital humans.

As of March 2025, DeepBrain AI is a prominent AI video generation company that reports serving over 70% of Fortune 500 companies, including Amazon, Lenovo, and NEC. In 2021, it completed a $44 million Series B funding round led by Korea Development Bank. It also maintains a U.S. office in Palo Alto, California (540 University Ave., Suite 200, Palo Alto, CA 94301). DeepBrain AI has won CES Innovation Awards and holds 148 AI-related patents. The company also offers deepfake detection solutions, emphasizing responsible AI use.

Product Features and Key Functions

DeepBrain AI is renowned for its efficient video generation and realistic AI avatars. Below are its core features and key functions:

Features:

- Ultra-Realistic AI Avatars: Offers over 100 lifelike virtual avatars covering various ages, ethnicities, and professions, with natural movements and expressions.

- Multilingual Support: Provides text-to-speech (TTS) in over 80 languages with natural accents and tones, suitable for global users.

- Fast Generation: Produces professional videos in minutes without filming equipment, reducing costs and time.

- Customization Options: Allows adjustments to avatar appearance, clothing, backgrounds, and brand elements.

- Security: Complies with GDPR and SOC 2 standards, prioritizing data privacy and content moderation.

Key Functions:

- Text-to-Video Generation: Input a script, and AI generates a video with avatars and voice.

- AI Voice Cloning: Upload audio samples to create personalized voices.

- Document-to-Video: Quickly converts PPTs, PDFs, etc., into videos with auto-generated scripts and narration.

- ChatGPT Integration: Built-in ChatGPT assists with script generation, boosting creation efficiency.

- Multi-Scene Support: Allows multiple avatars and scenes in a video for diverse content.

- Subtitles and Translation: Automatically generates subtitles with multilingual translation support.

- Template Library: Offers over 65 preset templates for education, marketing, and more.

Basic Tutorial: How to Operate DeepBrain AI

Below are the basic steps for using DeepBrain AI to create a video, suitable for beginners to get started quickly:

Step 1: Register and Log In

- Visit the official website aistudios.com.

- Click “Get Started” or “Sign Up” to register using an email or Google account.

- Log in to enter the AI Studios main interface; the free trial offers 1 minute of generation credit per month.

Step 2: Create a New Video

- On the homepage, click “New Project” or “Create Video.”

- Choose a generation method:

  - Text Input: Directly input a script.

  - Document Upload: Upload a PPT or PDF for AI to generate a video draft.

  - Template: Select from over 65 templates (e.g., company intros, tutorials).

- Click “Next” to enter editing mode.

Step 3: Edit the Video

- Script Editing: Input or adjust the script in the text box, with each paragraph corresponding to a scene.

- Select Avatar: Choose from over 100 avatars or create a custom one (requires uploading a photo or video; available on premium plans).

- Voice Settings: Select a language (over 80 supported) and a voice, adjusting speed or tone.

- Visual Adjustments: Add backgrounds (colors, images, videos), music, or subtitles, or upload custom materials.

- Branding: Add logos, fonts, or colors.

- Preview: Click “Preview” to check the effect and adjust details.

Step 4: Generate and Export

- Once confirmed, click “Generate” (typically takes a few minutes).

- Download the MP4 file (free version with watermark; paid version without) or share via link.

- For adjustments, return to the editing interface, modify, and regenerate.

Tips:

- Script Optimization: Use concise language, avoiding complex sentences.

- Material Prep: Prepare brand elements or custom materials in advance.

- Voice Check: Preview to ensure narration syncs with visuals.
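You can check two of the conventions above before uploading a script: one paragraph per scene, and concise sentences. The sketch below is a hypothetical pre-flight helper, not part of DeepBrain AI. It splits a script into scenes on blank lines and flags long sentences. It also estimates narration length at an assumed pace of 150 words per minute, so you can compare it against a small budget such as the 1-minute free-trial credit. Real TTS voices and speed settings will differ.

```python
import re

# Assumption: a typical narration pace of about 150 words per minute.
# Actual TTS voices vary, and speed settings change this further.
WORDS_PER_MINUTE = 150


def split_scenes(script: str) -> list[str]:
    """Split a script into scenes, one per blank-line-separated paragraph."""
    paragraphs = re.split(r"\n\s*\n", script.strip())
    return [p.strip() for p in paragraphs if p.strip()]


def estimate_seconds(text: str, wpm: int = WORDS_PER_MINUTE) -> float:
    """Estimate narration time for text at the given words-per-minute rate."""
    return len(text.split()) * 60 / wpm


def long_sentences(text: str, max_words: int = 20) -> list[str]:
    """Return sentences longer than max_words, which may sound unnatural."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if len(s.split()) > max_words]


script = """Welcome to our company. We build tools for remote teams.

Our platform connects people across time zones. It keeps everyone in sync."""

scenes = split_scenes(script)
total = sum(estimate_seconds(s) for s in scenes)
print(len(scenes), round(total, 1))

# Compare against a 60-second budget, such as a 1-minute free-trial credit.
print("fits" if total <= 60 else "too long")
for scene in scenes:
    for sentence in long_sentences(scene):
        print("Consider shortening:", sentence)
```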

Who DeepBrain AI Is Suitable For

DeepBrain AI’s features suit various user groups. Below are its primary target audiences:

Enterprise Users

- Use Case: Employee training, company intros, customer support videos.

- Reason: Efficiently generates professional videos, with multilingual support and branding suitable for multinational firms.

- Typical Users: HR teams, marketing departments, startups.

Content Creators

- Use Case: YouTube videos, social media content, podcast visualizations.

- Reason: Quickly generates avatar-led videos, with templates and voice options boosting appeal.

- Typical Users: Vloggers, podcasters.

Educators

- Use Case: Online courses, instructional demos, knowledge sharing.

- Reason: Document-to-video simplifies teaching content creation, with multilingual support for global students.

- Typical Users: Teachers, trainers.

Marketers

- Use Case: Ad campaigns, product demos, personalized marketing.

- Reason: Supports rapid creation of engaging videos, with translation aiding global outreach.

- Typical Users: Digital marketing specialists, e-commerce sellers.

Budget-Constrained Beginners

- Use Case: Personal projects, low-cost promotions.

- Reason: Free trial and simple operation suit users without technical backgrounds.

- Typical Users: Students, freelancers.

Not Suitable For:

- Professional Filmmakers: Lacks advanced post-production features compared to traditional software.

- Extreme Creativity Seekers: Output tends toward standardized results and is less artistically flexible than Runway.

Colossyan

Colossyan is an AI-powered video generation tool designed to quickly create video content with realistic AI avatars from text input. It was developed by Colossyan, a company founded in 2020, headquartered in Berlin, Germany. Founders include CEO Kristof Szabo, Chief Product Officer Dominik Mate Kovacs, and CTO Zoltan Kovacs, who aim to apply AI to video production, addressing the high costs and time demands of traditional methods.

The company gained attention quickly after its founding, securing €1 million in seed funding in July 2021, led by Hungary’s Day One Capital, with participation from early-stage VC APX (backed by Axel Springer and Porsche) and angel investors Mikal Hallstrup (Designit founder) and Akos Kapui (Shapr3D VP of Engineering). As of March 2025, Colossyan serves clients across industries worldwide, including the New Mexico State Government and Accenture, focusing on workplace learning and corporate training. The company emphasizes efficiency, ease of use, and multilingual support, aiming to make video creation more inclusive.

Product Features and Key Functions

Colossyan is noted for its focus on workplace learning and user-friendly design. Below are its core features and key functions:

Features:

- Realistic AI Avatars: Offers over 150 virtual avatars covering different ages, ethnicities, and professions, with natural expressions and movements.

- Multilingual Support: Supports over 70 languages with auto-translation and multiple accent options, suitable for global audiences.

- Efficient Generation: Completes video production in minutes, without filming equipment or editing skills.

- Brand Customization: Supports uploading brand logos, colors, and fonts for consistent video branding.

- Collaboration: Offers team collaboration features similar to shared document workflows, ideal for multi-user editing.

Key Functions:

- Text-to-Video Generation: Input a script, and AI generates a video with avatars and voice.

- Document-to-Video: Converts PDFs and PPTs into dynamic videos with auto-generated scripts and narration.

- AI Script Assistant: Uses GPT-3 to generate scripts, optimize text, or fix grammar.

- Multi-Character Dialogue: Supports multiple avatars conversing in a single scene, ideal for scenario-based training.

- Auto-Translation and Subtitles: One-click translation and subtitle generation enhance accessibility.

- Template Library: Offers dozens of preset templates for training, marketing, and explainer videos.

- Media Enhancement: Supports adding stock images, videos, music, or custom uploads.

Basic Tutorial: How to Operate Colossyan

Below are the basic steps for using Colossyan to create a video, suitable for beginners to get started quickly:

Step 1: Register and Log In

- Visit the official website colossyan.com.

- Click “Get Started” or “Sign Up” to register using an email or Google account.

- Log in to enter the main interface; the free trial provides 14 days of access to a limited feature set.

Step 2: Create a New Video

- On the homepage, click “New Draft” or “Create Video.”

- Choose a generation method:

  - Text Input: Directly input a script.

  - Document Import: Upload a PDF or PPT (max 10 MB) for AI to generate a video draft.

  - Template: Select from preset templates (e.g., training, marketing).

- Click “Next” to enter the editing interface.

Step 3: Edit the Video

- Script Editing: Input or adjust text in the script box, with each paragraph corresponding to a scene.

- Select Avatar: Choose from over 150 avatars, adjusting clothing, position, or expressions.

- Voice Settings: Select a language and voice (over 600 options), adjusting speed or tone.

- Visual Adjustments: Add backgrounds (stock or custom), images, music, or subtitles.

- Branding: Upload logos and set brand colors (available on enterprise plans).

- Preview: Click “Preview” to check the effect and adjust details.

Step 4: Generate and Export

- Once confirmed, click “Generate” (typically takes a few minutes).

- Download the MP4 file (free version with watermark; paid version without) or share via link.

- For adjustments, return to the editing interface, modify, and regenerate.

Tips:

- Concise Scripts: Short sentences and simple words sound more natural when narrated.

- Multilingual Testing: Check subtitle accuracy after translation.

- Team Collaboration: Invite team members to co-edit for efficiency.

Who Colossyan Is Suitable For

Colossyan’s functional design suits the following groups:

Enterprise Users

- Use Case: Employee training, internal communication, customer support videos.

- Reason: Efficiently generates professional videos, with collaboration and branding features meeting enterprise needs.

- Typical Users: HR teams, marketing departments, startups.

Educators

- Use Case: Online courses, instructional videos, onboarding training.

- Reason: Document-to-video and multilingual support simplify teaching content creation.

- Typical Users: Teachers, trainers, educational platform developers.

Marketers

- Use Case: Ad campaigns, product demos, social media content.

- Reason: Quickly produces engaging videos, with translation aiding global outreach.

- Typical Users: Digital marketing specialists, brand managers.

Content Creators

- Use Case: Tutorial videos, knowledge sharing, short videos.

- Reason: Simple operation and templates support rapid content production.

- Typical Users: YouTubers, social media influencers.

Budget-Constrained Beginners

- Use Case: Personal projects, low-cost promotions.

- Reason: Free trial and intuitive interface suit users without technical backgrounds.

- Typical Users: Students, freelancers.

Not Suitable For:

- Professional Filmmakers: Lacks complex editing and effects features.

- Extreme Creativity Seekers: Output tends toward standardized results and is less artistically flexible than Runway.

Runway

Runway is developed by Runway AI, Inc. (also known as Runway or RunwayML), a U.S. company founded in 2018 and headquartered in New York City. Its founders, Cristóbal Valenzuela and Alejandro Matamala from Chile and Anastasis Germanidis from Greece, met at NYU’s Tisch School of the Arts Interactive Telecommunications Program (ITP). The company focuses on generative AI research and develops tools for generating video, images, and other multimedia content.

Runway is known for its innovation in AI video generation, having launched commercial models such as Gen-1, Gen-2, and Gen-3 Alpha. In December 2022, it raised $50 million in a Series C round. It followed this with a $141 million Series C extension in June 2023 at a $1.5 billion valuation, with investors including Google, NVIDIA, and Salesforce. Runway researchers also co-developed the widely used Stable Diffusion image generation model with the CompVis group at LMU Munich, with compute support from Stability AI, underscoring the company’s influence in generative AI. Runway has been named one of Time magazine’s 100 most influential companies. Its tools have been used in the film Everything Everywhere All at Once, in music videos by A$AP Rocky and Kanye West, and in editing for The Late Show with Stephen Colbert.

Runway’s mission is to “shape the next era of art, entertainment, and human creativity” through AI, with products widely applied in filmmaking, post-production, advertising, and visual effects.

Product Features and Key Functions

Runway is distinguished by its powerful multimodal generation and rich editing tools. Below are its core features and key functions:

Features:

- Multimodal Generation: Supports text, image, and video inputs to generate diverse video content.

- High-Quality Output: Gen-3 Alpha model delivers high-fidelity, smooth-motion videos.

- Creative Control: Offers multiple style presets and precise motion control, balancing artistry and practicality.

- User-Friendly: Intuitive interface suits beginners and pros, with real-time previews enhancing efficiency.

- Safety: Built-in content moderation, with generated videos tagged with C2PA provenance metadata to help identify AI-generated content and deter misuse.

Key Functions:

- Text-to-Video: Generates new videos from text prompts (e.g., “A unicorn running in a forest at night”).

- Image-to-Video: Converts static images into dynamic videos with natural motion.

- Video-to-Video: Adds styles or effects to existing videos (e.g., turning real scenes into cartoons).

- Advanced Editing Tools:

  - Motion Brush: Controls motion in specific areas of a video.

  - Frame Interpolation: Generates smooth transitions between a sequence of still images.

  - Green Screen: Automatically removes or replaces backgrounds.

  - Inpainting: Removes unwanted objects from videos.

  - Lip Sync: Syncs text or audio with an avatar’s facial movements.

  - Act-One: Generates character performances from driving videos and reference images.

- Templates and Collaboration: Provides project templates and supports real-time team collaboration.

Basic Tutorial: How to Operate Runway

Below are the basic steps for using Runway to create a video, suitable for beginners to get started quickly:

Step 1: Register and Log In

- Visit the official website runwayml.com.

- Click “Sign Up” to register using an email or Google/Apple account.

- Log in to the main dashboard; the free plan offers 125 credits (about 25 watermarked images or short videos).

Step 2: Create a New Video

- On the homepage, click “Create” or “New Project.”

- Choose a generation mode:

  - Text-to-Video: Input a text prompt (e.g., “A flying car in a cyberpunk city”).

  - Image-to-Video: Upload an image and add a description.

  - Video-to-Video: Upload a video and input a style prompt.

- Set parameters: Select the Gen-3 Alpha model, then adjust duration (up to 18 seconds), resolution (up to 1080p), and aspect ratio.

Step 3: Edit the Video

- Refine Prompt: Add details in the text box (e.g., “Neon lights flashing, rainy day”).

- Style Adjustment: Choose preset styles (e.g., “cinematic” or “cartoon”).

- Enhance Details: Use Motion Brush for specific motion areas or Green Screen for background swaps.

- Preview: Click “Preview” to view the effect and tweak prompts or parameters.

Step 4: Generate and Export

- Click “Generate” and wait (seconds to minutes, depending on complexity and server load).

- View results in “Assets,” downloadable as MP4 (free version with watermark; paid version without) or shareable.

- Optimize using editing tools (e.g., Inpainting) and regenerate as needed.

Tips:

- Clear Prompts: Specific descriptions (e.g., “Low-angle shot, man walking down neon street”) work best.

- Experiment with Styles: Try presets for unique visuals.

- Check Quota: Free users should avoid lengthy generations to conserve credits.
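To budget a small credit balance, estimate the cost of each clip before you generate it. The sketch below makes two assumptions. The per-second credit rate is a placeholder; replace it with the figure on Runway’s current pricing page for the model you select. Rounding partial credits up is also an assumption, not documented behavior.

```python
import math


def credits_needed(seconds: float, credits_per_second: float) -> int:
    """Credits for one clip, rounding any partial credit up (an assumption)."""
    if seconds <= 0 or credits_per_second <= 0:
        raise ValueError("seconds and credits_per_second must be positive")
    return math.ceil(seconds * credits_per_second)


def clips_affordable(balance: int, seconds: float, credits_per_second: float) -> int:
    """Number of clips of the given length that fit in the credit balance."""
    return balance // credits_needed(seconds, credits_per_second)


# Placeholder rate for illustration only; look up the real per-second
# cost of your chosen model before relying on these numbers.
RATE = 5
BALANCE = 125  # the free-plan credit allotment mentioned above

for length in (5, 10):
    cost = credits_needed(length, RATE)
    print(f"{length}s clip: {cost} credits, "
          f"{clips_affordable(BALANCE, length, RATE)} clips")
```

Whatever the real rate is, cost scales with duration. Short test clips therefore stretch a free balance further, which is the point of the quota tip.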

Who Runway Is Suitable For

Runway’s functional design suits various user groups. Below are its primary target audiences:

Content Creators

- Use Case: YouTube videos, TikTok shorts, music videos.

- Reason: Quickly generates creative content with image animation and stylization, ideal for frequent updates.

- Typical Users: Vloggers, social media influencers, musicians.

Filmmakers and Video Producers

- Use Case: Storyboard creation, effect generation, preview edits.

- Reason: Multimodal generation and advanced tools speed up pre- and post-production.

- Typical Users: Directors, visual effects artists.

Marketers

- Use Case: Ad videos, product demos, brand promotions.

- Reason: Efficiently creates engaging content, with team collaboration and customization.

- Typical Users: Digital marketing specialists, brand managers.

Educators

- Use Case: Instructional videos, animated explainers, virtual classroom backgrounds.

- Reason: Converts text or images into dynamic content, enhancing teaching interactivity.

- Typical Users: Teachers, course developers.

Artists and Designers

- Use Case: Experimental art, digital works, proof-of-concept.

- Reason: Diverse styles and creative control suit new media exploration.

- Typical Users: Digital artists, graphic designers.

Not Suitable For:

- Professional Post-Editors: Lacks fine-grained editing compared to traditional software.

- Extremely Budget-Constrained Users: Free credits are limited; full features require payment ($15/month+).

- Users with Simple Needs: The wide range of features may overwhelm those who only need basic tools.

Pictory

Pictory is an AI-powered tool that transforms text into video, helping users quickly create professional video content without complex editing skills or expensive equipment. It was developed by Pictory, a company founded in 2019, headquartered in Seattle, Washington, USA. Founders Vikram Chalana, Vishal Chalana, and Abid Mohammed bring extensive experience in software development, tech leadership, and growth strategy. Previously, they worked together at Winshuttle (an enterprise software company), building a strong technical foundation before launching Pictory to meet the growing demand for short-form video content.

As of March 2025, Pictory is a key player in AI video generation, serving global users, including marketers, educators, and content creators. Operating via a cloud platform with a subscription model, it has earned high ratings on G2 (4.7/5 in 2024). Pictory emphasizes user-friendliness and efficiency, using AI to lower video production barriers while supporting multilingual content for global needs.

Product Features and Key Functions

Pictory is known for its simplicity and strong content conversion capabilities. Below are its core features and key functions:

Features:

- Efficient Content Conversion: Quickly transforms text, blog posts, or URLs into videos, shortening production time.

- Realistic AI Voices: Offers over 600 AI-generated voices in multiple languages and accents, with natural fluency.

- Rich Media Library: Includes over 10 million royalty-free images, video clips, and music; users can upload custom materials.

- Auto-Subtitles: Generates subtitles automatically, improving accessibility and watch time (Facebook’s internal tests found that captioned video ads increased view time by an average of 12%).

- Brand Customization: Allows adding brand logos, colors, and fonts for content consistency.

Key Functions:

- Text-to-Video Generation: Converts scripts or text directly into videos, auto-matching visuals and voice.

- Blog-to-Video: Input a blog URL, and AI extracts key points to create a video.

- Video Editing: Edits video content via text, such as clipping segments or removing filler words (“um,” “ah”).

- Highlight Extraction: Auto-extracts short clips from Zoom, Teams, or podcast recordings for social media sharing.

- PPT-to-Video: Transforms PowerPoint presentations into dynamic videos.

- Voice Options: Supports custom voice uploads or AI voices for personalization.

- Team Collaboration: Allows multiple users to edit projects in real-time, enhancing team efficiency.

Basic Tutorial: How to Operate Pictory

Below are the basic steps for using Pictory to create a video, suitable for beginners to get started quickly:

Step 1: Register and Log In

- Visit the official website pictory.ai.

- Click “Get Started” or “Sign Up” to register using an email or Google account.

- Log in to the main dashboard; the free trial offers 3 videos per month (with watermark).

Step 2: Create a New Video

- On the dashboard, choose one of four main modes:

  - Script to Video: Input a script.

  - Article to Video: Paste a blog URL.

  - Edit Video Using Text: Upload an existing video for editing.

  - Visuals to Video: Upload images or short videos for a slideshow.

- For “Script to Video,” select the mode, enter a video title and script, then click “Proceed.”

Step 3: Edit the Video

- Script Adjustment: Edit text, with AI auto-splitting it into scenes.

- Select Template: Choose from preset templates (e.g., social media, tutorials).

- Visual Matching: AI adds stock images/videos, which can be manually replaced or supplemented with uploads.

- Voice Settings: Select an AI voice (language and gender options) or upload your own recording.

- Add Elements: Insert background music, subtitles, or brand logos.

- Preview: Click “Preview” to check the effect and adjust details.

Step 4: Generate and Export

- Click “Generate” (typically takes a few minutes).

- Download the MP4 file (free version with watermark; paid version without) or share directly.

- For adjustments, return to the editing interface, modify, and regenerate.

Tips:

- Script Optimization: Use short sentences for natural narration.

- Material Selection: Prioritize high-resolution custom materials.

- Preview Checks: Ensure subtitles sync with visuals.

Who Pictory Is Suitable For

Pictory’s functional design suits various user groups. Below are its primary target audiences:

Content Creators

- Use Case: YouTube videos, TikTok shorts, podcast visualizations.

- Reason: Quickly converts text or recordings into videos, with a rich media library enhancing appeal.

- Typical Users: Vloggers, social media influencers.

Marketers

- Use Case: Ad campaigns, product demos, social media promotions.

- Reason: Efficiently produces branded videos, with multilingual support for global marketing.

- Typical Users: Digital marketing specialists, e-commerce sellers.

Educators

- Use Case: Online courses, instructional videos, PPT presentations.

- Reason: Transforms course materials into dynamic content, with subtitles and voice enhancing teaching.

- Typical Users: Teachers, course developers.

Enterprise Users

- Use Case: Employee training, internal communication, customer support videos.

- Reason: Team collaboration and efficiency suit enterprise needs.

- Typical Users: HR teams, small business owners.

Budget-Constrained Beginners

- Use Case: Personal projects, low-cost promotions.

- Reason: Free trial and simple operation suit users without technical backgrounds.

- Typical Users: Students, freelancers.

Not Suitable For:

- Professional Filmmakers: Lacks complex editing and effects compared to traditional software.

- Extreme Creativity Seekers: Output tends toward standardized results and is less artistically flexible than Runway.

InVideo

InVideo is an AI-based online video creation tool designed to help users quickly transform text, images, or other content into professional videos without complex editing skills or equipment. It was developed by InVideo, a company founded in 2017, headquartered in Mumbai, India. Founders Sanket Shah and Harsh Vakharia brought extensive tech and entrepreneurial experience, aiming to simplify video creation with AI to meet the rising demand for digital content.

As of March 2025, InVideo reports over 7 million registered users across more than 190 countries. It supports multiple languages and is widely used in social media marketing, education, and corporate promotion. Operating on a subscription model with free and paid plans, InVideo secured $2.5 million in seed funding in 2020, led by Sequoia Capital India. It emphasizes user-friendliness and efficiency, enabling anyone to create high-quality videos easily.

Product Features and Key Functions

InVideo is known for its vast template library and AI-driven features. Below are its core features and key functions:

Features:

- Massive Template Library: Offers over 5,000 customizable templates for social media, business, education, and more.

- AI-Assisted Generation: Automatically generates videos from text input, simplifying creation.

- Multilingual Support: Supports over 40 languages with AI voices, suitable for global content.

- User-Friendly Interface: Drag-and-drop design, intuitive and accessible without editing experience.

- Rich Media Resources: Includes over 16 million royalty-free images, videos, and music, with custom uploads supported.

Key Functions:

- Text-to-Video Generation: Input a script or prompt, and AI matches visuals and voice to create a video.

- Article-to-Video: Paste a blog URL or article, and AI extracts key points for a video.

- Video Editing Tools: Supports trimming, cropping, adding transitions, subtitles, and animations.

- AI Voice Narration: Offers natural voices in multiple languages and accents, or custom audio uploads.

- Brand Kit: Applies brand logos, colors, and fonts with one click for consistency.

- Social Media Optimization: Supports platform-specific aspect ratios (e.g., 16:9 for YouTube, 1:1 for Instagram).

- Team Collaboration: Allows real-time editing and feedback among multiple users.

Basic Tutorial: How to Operate InVideo

Below are the basic steps for using InVideo to create a video, suitable for beginners to get started quickly:

Step 1: Register and Log In

- Visit the official website invideo.io.

- Click “Sign Up” in the top-right corner to register using an email, Google, or Apple account.

- Log in to the main dashboard; the free plan offers 10 minutes of generation credit per month (with watermark).

Step 2: Create a New Video

- On the dashboard, click “Create a Video” or “New Project.”

- Choose a generation method:

  - Blank Canvas: Start from scratch and manually enter a script.

  - Text to Video: Input a text prompt for AI to generate a video.

  - Templates: Select from over 5,000 templates (e.g., YouTube intros, ads).

  - Workflows: Choose preset flows (e.g., YouTube Shorts, article-to-video).

- Click “Continue” to enter the editing interface.

Step 3: Edit the Video

- Input Content: Enter text in the script box or paste a URL (article-to-video mode).

- Select Template or Style: Pick a template or adjust aspect ratio (e.g., 16:9, 9:16).

- Add Visuals: AI auto-matches stock images/videos, replaceable manually or with uploads.

- Voice Settings: Select an AI voice (language and gender options), adjust speed, or upload audio.

- Customize Elements: Add music, subtitles, brand logos, or use “Edit Magic Box” for commands (e.g., “remove scene”).

- Preview: Click “Preview” to check the effect and adjust details.

Step 4: Generate and Export

- Click “Export” (typically takes a few minutes).

- Download the MP4 file (free version with watermark; paid version without) or share to social media.

- For adjustments, click “Edit,” modify, and regenerate.

Tips:

- Clear Prompts: Specify details in text input (e.g., “Blue background, upbeat music”).

- Material Prep: Upload brand elements or high-quality materials beforehand.

- Format Check: Ensure output matches the target platform’s format.
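You can automate the format check by comparing a video’s pixel dimensions with the target aspect ratio. The sketch below assumes the hypothetical `TARGETS` table, built only from the ratios mentioned in this section. It matches with a small tolerance, so common near-16:9 sizes such as 854x480 still pass. Check each platform’s own upload guidelines for the authoritative values.

```python
from math import gcd

# Hypothetical target table built from the ratios mentioned above:
# 16:9 for YouTube, 9:16 for vertical video, 1:1 for square Instagram posts.
TARGETS = {
    "youtube": (16, 9),
    "shorts": (9, 16),
    "instagram_square": (1, 1),
}


def aspect_ratio(width: int, height: int) -> tuple[int, int]:
    """Reduce a pixel size such as 1920x1080 to its simplest ratio."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    d = gcd(width, height)
    return width // d, height // d


def matches_target(width: int, height: int, platform: str,
                   tolerance: float = 0.01) -> bool:
    """True if width/height is within tolerance of the platform's ratio."""
    tw, th = TARGETS[platform]
    return abs(width / height - tw / th) <= tolerance


print(aspect_ratio(1920, 1080))                         # (16, 9)
print(matches_target(1080, 1920, "shorts"))             # True
print(matches_target(1920, 1080, "instagram_square"))   # False
```

The tolerance exists because exact ratio reduction is too strict. For example, 854x480 reduces to 427:240 rather than 16:9, even though it is the usual 480p widescreen size.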

Who InVideo Is Suitable For

InVideo’s functional design suits various user groups. Below are its primary target audiences:

Content Creators

- Use Case: YouTube videos, TikTok shorts, social media posts.

- Reason: Rich templates and fast generation suit creators needing frequent content.

- Typical Users: Vloggers, social media influencers.

Marketers

- Use Case: Ad campaigns, product demos, promotional videos.

- Reason: Brand customization and multilingual support aid global outreach, efficiently creating engaging content.

- Typical Users: Digital marketing specialists, e-commerce sellers.

Educators

- Use Case: Online courses, instructional videos, presentations.

- Reason: Converts text or PPTs into dynamic content, with voice and subtitles enhancing teaching.

- Typical Users: Teachers, course developers.

Enterprise Users

- Use Case: Employee training, internal communication, corporate promotions.

- Reason: Team collaboration and branding features meet enterprise needs.

- Typical Users: HR teams, small business owners.

Budget-Constrained Beginners

- Use Case: Personal projects, low-cost promotions.

- Reason: Free plan and intuitive interface suit users without technical backgrounds.

- Typical Users: Students, freelancers.

Not Suitable For:

- Professional Filmmakers: Lacks complex effects and fine editing features.

- Extreme Creativity Seekers: AI output tends toward standardized results and is less artistically flexible than Runway.

Summary

In this article, we introduced nine mainstream AI video generation tools, each with distinct strengths suited to different audiences: Synthesia, Fliki, HeyGen, Colossyan, DeepBrain AI, Runway, Pictory, InVideo, and Sora (OpenAI). They range from Synthesia’s efficient, professional avatar videos to Runway’s open-ended creative generation, and from Fliki’s ease of use to Pictory’s strength in converting existing content into video. Enterprise users may need training videos, content creators may want social media hits, and artists may be exploring visual innovation; in each case, these tools offer fast, low-cost options. By understanding each tool’s background, features, and use cases, creators can choose the one that best fits their needs and improve both the speed and the quality of their video production.

Data References

- eMarketer, Worldwide OTT Video Viewers Forecast 2025

- Statista, Number of Digital Video Viewers Worldwide from 2019 to 2023

- Grand View Research, Digital Content Creation Market Size and Share Report, 2030

- Zebracat, Video Consumption Trends for 2025

- Additional Sources: Official TikTok and YouTube data were referenced, typically available via news releases or annual reports, without fixed links. Check their official sites (e.g., tiktok.com, about.youtube.com) for the latest statements.